The REA Approach to Database Modeling:Data Warehousing

Data Warehousing

Data warehousing is one of the fastest growing IT issues for businesses today. Not surprisingly, data warehousing functionality is being incorporated into all leading ERP systems. A data warehouse is a relational or multidimensional database that may consume hundreds of gigabytes or even terabytes of disk storage. When the data warehouse is organized for a single department or function, it is often called a data mart. Rather than containing hundreds of gigabytes of data for the entire enterprise, a data mart may have only tens of gigabytes of data. Other than size, we make no distinction between a data mart and a data warehouse. The issues discussed in this section apply to both.

The process of data warehousing involves extracting, converting, and standardizing an organization’s operational data from ERP and legacy systems and loading it into a central archive—the data warehouse. Once loaded into the warehouse, data are accessible via various query and analysis tools that are used for data mining. Data mining, which was introduced in Chapter 8, is the process of selecting, exploring, and modeling large amounts of data to uncover relationships and global patterns that exist in large databases but are hidden among the vast number of facts. This involves sophisticated techniques that use database queries and artificial intelligence to model real-world phenomena from data collected from the warehouse.

Most organizations implement a data warehouse as part of a strategic IT initiative that involves an ERP system. Implementing a successful data warehouse involves installing a process for gathering data on an ongoing basis, organizing it into meaningful information, and delivering it for evaluation. The data warehousing process has the following essential stages:3

• Modeling data for the data warehouse

• Extracting data from operational databases

• Cleansing extracted data

• Transforming data into the warehouse model

• Loading the data into the data warehouse database

MODELING DATA FOR THE DATA WAREHOUSE

Chapters 9 and 10 stressed the importance of data normalization to eliminate three serious anomalies: the update, insertion, and deletion anomalies. Normalizing data in an operational database is necessary to reflect accurately the dynamic interactions among entities. Data attributes are constantly updated, new attributes are added, and obsolete attributes are deleted on a regular basis. Even though a fully normalized database will yield the flexible model needed for supporting multiple users in this dynamic operational environment, it also adds to complexity in that it translates into performance inefficiency.

The Warehouse Consists of Denormalized Data

Because of the vast size of a data warehouse, such inefficiency can be devastating. A three-way join between tables in a large data warehouse may take an unacceptably long time to complete and may be unnecessary. In the data warehouse model, the relationship among attributes does not change. Because historical data are static in nature, nothing is gained by constructing normalized tables with dynamic links.

For example, in an operational database system, Product X may be an element of work-in-process (WIP) in Department A this month and part of Department B’s WIP next month. In a properly normalized data model, it would be incorrect to include Department A’s WIP data as part of a Sales Order table that records an order for Product X. Only the product item number would be included in the Sales Order table as a foreign key linking it to the Product table. Relational theory would call for a join (link) between the Sales Order table and Product table to determine the production status (that is, which department the product is currently in) and other attributes of the product. From an operational perspective, complying with relational theory is important because the relation changes as the product moves through different departments over time. Relational theory does not apply to a data warehousing system because the Sales Order/Product relation is stable.

Wherever possible, therefore, normalized tables pertaining to selected events may be consolidated into denormalized tables. Figure 11-6 illustrates how sales order data are reduced to a single denormalized Sales Order table for storage in a data warehouse system.

EXTRACTING DATA FROM OPERATIONAL DATABASES

Data extraction is the process of collecting data from operational databases, flat files, archives, and external data sources. Operational databases typically need to be out of service when data extraction occurs to avoid data inconstancies. Because of their large size and the need for a speedy transfer to minimize the downtime, little or no conversion of data occurs at this point. A technique called changed data capture can dramatically reduce the extraction time by capturing only newly modified data. The extraction soft- ware compares the current operational database with an image of the data taken at the last transfer of data to the warehouse. Only the data that have changed in the interim are captured.

Extracting Snapshots versus Stabilized Data

Transaction data stored in the operational database go through several stages as economic events unfold. For example, a sales transaction first undergoes credit approval, then the product is shipped, then billing occurs, and finally payment is received. Each of these events changes the state of the transaction and associated accounts such as inventory, accounts receivable, and cash.

A key feature of a data warehouse is that the data contained in it are in a nonvolatile, stable state. Typically, transaction data are loaded into the warehouse only when the activity on them has been completed. Potentially important relationships between entities may, however, be absent from data that are captured in this stable state. For example, information about canceled sales orders will probably not be reflected among the sales orders that have been shipped and paid for before they are placed in the warehouse. One way to reflect these dynamics is to extract the operations data in slices of time. These slices provide snap- shots of business activity. For example, decision makers may want to observe sales transactions approved, shipped, billed, and paid at various points in time along with snapshots of inventory levels at each state. Such data may be useful in depicting trends in the average time taken to approve credit or ship goods that might help explain lost sales.

CLEANSING EXTRACTED DATA

Data cleansing involves filtering out or repairing invalid data prior to being stored in the warehouse. Operational data are dirty for many reasons. Clerical, data entry, and computer program errors can create illogical data such as negative inventory quantities, misspelled names, and blank fields. Data cleansing also involves transforming data into standard business terms with standard data values. Data are often combined from multiple systems that use slightly different spellings to represent common terms, such as

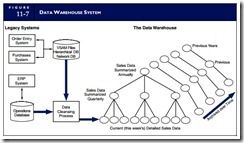

cust, cust_id, or cust_no. Some operational systems may use entirely different terms to refer to the same entity. For example, a bank customer with a certificate of deposit and an outstanding loan may be called a lender by one system and a borrower by another. The source application may use cryptic or difficult- to-understand terms for a number of reasons. For example, some older legacy systems were designed at a time when programming rules placed severe restrictions on naming and formatting data attributes. Also, a commercial application may assign attribute names that are too generic for the needs of the data ware- house user. Businesses that purchase commercial data, such as competitive performance information or market surveys, need to extract data from whatever format the external source provides and reorganize them according to the conventions used in the data warehouse. During the cleansing process, therefore, the attributes taken from multiple systems need to be transformed into uniform, standard business terms. This tends to be an expensive and labor-intensive activity, but one that is critical in establishing data in- tegrity in the warehouse. Figure 11-7 illustrates the role of data cleansing in building and maintaining a data warehouse.

TRANSFORMING DATA INTO THE WAREHOUSE MODEL

A data warehouse is composed of both detail and summary data. To improve efficiency, data can be transformed into summary views before they are loaded into the warehouse. For example, many decision makers may need to see product sales figures summarized weekly, monthly, quarterly, or annually. It may not be practical to summarize information from detail data every time the user needs it. A data ware- house that contains the most frequently requested summary views of data can reduce the amount of processing time during analysis. Referring again to Figure 11-7, we see the creation of summary views over time. These are typically created around business entities such as customers, products, and suppliers. Unlike operational views, which are virtual in nature with underlying base tables, data warehouse views are physical tables. Most OLAP software will, however, permit the user to construct virtual views from detail data when one does not already exist.

A data warehouse will often provide multiple summary views based on the same detailed data such as customers or products. For example, several different summary views may be generated from sales order detail data. These may include summaries by product, customer, and region. From such views, an analyst can drill down into the underlying detail data. Many business problems require a review of detail data to fully evaluate a trend, pattern, or anomaly exhibited in the summarized reports. Also, a single anomaly in detail data may manifest itself differently in different summary views.

LOADING THE DATA INTO THE DATA WAREHOUSE DATABASE

Most organizations have found that data warehousing success requires that the data warehouse be created and maintained separately from the operational (transaction processing) databases. This point is developed further in the next sections.

Internal Efficiency

One reason for a separate data warehouse is that the structural and operational requirements of transaction processing and data mining systems are fundamentally different, making it impractical to keep both operational (current) and archive data in the same database. Transaction processing systems need a data structure that supports performance, whereas data mining systems need data organized in a manner that permits broad examination and the detection of underlying trends.

Integration of Legacy Systems

The continued influence of legacy systems is another reason that the data warehouse needs to be independent of operations. A remarkably large number of business applications continue to run in the main- frame environment of the 1970s. By some estimates, more than 70 percent of business data for large corporations still resides in the mainframe environment. The data structures these systems employ are of- ten incompatible with the architectures of modern data mining tools. Hence, transaction data that are stored in navigational databases and Virtual Storage Access Method systems often end up in large tape libraries that are isolated from the decision process. A separate data warehouse provides a venue for integrating the data from legacy and contemporary systems into a common structure that supports entity-wide analysis.

Consolidation of Global Data

Finally, the emergence of the global economy has brought about fundamental changes in business organizational structure and has profoundly changed the information requirements of business entities. Unique business complexities challenge decision makers in the global corporation. For example, they need to assess the profitability of products built and sold in multiple countries with volatile currencies. Such challenges add complexity to data mining. A separate centralized data warehouse is an effective means of collecting, standardizing, and assimilating data from diverse sources.

In conclusion, the creation of a data warehouse separate from operational systems is a fundamental

data warehousing concept. Many organizations now consider data warehouse systems to be key components of their information systems strategy. As such, they allocate considerable resources to build data warehouses concurrently with the operational systems being implemented.

DECISIONS SUPPORTED BY THE DATA WAREHOUSE

By making the data warehouse as flexible and friendly as possible, it becomes accessible by many end users. Some decisions that a data warehouse supports are not fundamentally different from those that traditional databases support. Other information uses, such as multidimensional analysis and information visualization, are not possible with traditional systems. Some users of the data warehouse need routine reports based on traditional queries. When standard reports can be anticipated in advance, they can be provided automatically as a periodic product. Automatic generation of standard information reduces access activity against the data warehouse and will improve its efficiency in dealing with more esoteric needs.

Drill-down capability is a useful data analysis technique associated with data mining. Drill-down analysis begins with the summary views of data described previously. When anomalies or interesting trends are observed, the user drills down to lower-level views and ultimately into the underlying detail data.

Obviously, such analysis cannot be anticipated like a standard report. Drill-down capability is an OLAP feature of data mining tools available to the user. Tools for data mining are evolving rapidly to satisfy the decision maker’s need to understand the business unit’s behavior in relation to key entities including customers, suppliers, employees, and products. Standard reports and queries produced from summary views can answer many what questions, but drill-down capability answers the why and how questions. Table 11-1 summarizes some of the applications of data mining in decision support.

SUPPORTING SUPPLY CHAIN DECISIONS FROM THE DATA WAREHOUSE The primary reason for data warehousing is to optimize business performance. Many organizations believe that more strategic benefit can be gained by sharing data externally. By providing customers and suppliers with the information they need when they need it, the company can improve its relationships and provide better service. The potential gain to the giving organization is seen in a more responsive and efficient supply chain. Using Internet technologies and OLAP applications, an organization can share its data warehouse with its trading partners and, in effect, treat them like divisions of the firm. A few examples of this approach are outlined in the following extract.4

Western Digital Corporation, a leading manufacturer of hard drives, plans to grant certain suppliers access to its data warehouse so suppliers can view performance data on their parts. Because Western Digital maintains a limited engineering staff, the company relies on its suppliers to act as strategic partners in product development. Providing suppliers with performance data allows them to make improvements and participate in the engineering process. The suppliers improve their parts, which in turn improves Western Digital’s products.

The company’s data warehouse holds more than 600 gigabytes of raw data collected from more than 100,000 drives that it manufactures each day. Approximately 800 attributes are collected on each drive, which can be analyzed using OLAP software. The systems feeding the warehouse include ERP applications, data from trouble-call centers, data from failure-analysis systems, and field test data from customer sites and service centers. The company routinely searches the data warehouse for failure in- formation on every drive that it manufactures. All failures and their causes can be linked back to the supplier.

The General Motors (GM) supply-chain data warehouse is available via the Web to more than 5,000 suppliers worldwide. Suppliers can log on to a secure Web site and query information on the quantities of supplies shipped, delivery times, and prices. This information will help GM suppliers optimize their product planning, ability to source materials, and shipping-fulfillment processes.

MIM Health Plans Inc., an independent pharmacy benefits management company, lets its customers view warehouse data to promote better buying decisions. For instance, benefits managers can view reports and drill down into the warehouse to see claims costs, overall costs, the number of prescriptions ordered in a given time period, the number of brand versus generic drugs, and other decision metrics.

Comments

Post a Comment