Construct, Deliver, and Maintain Systems Project: Construct the System

Construct the System

The main goal of the construct phase is to design and build working software that is ready to be tested and delivered to its user community. This phase involves modeling the system, programming the applications, and testing the applications. The design and programming of modern systems follow one of two basic approaches: the structured approach and the object-oriented approach. We begin this section with a review of these competing methodologies. We then examine construct issues related to system design, programming, and testing.

THE STRUCTURED DESIGN APPROACH

The structured design approach is a disciplined way of designing systems from the top down. It starts with the big picture of the proposed system, which is gradually decomposed into more and more detail until it is fully understood. Under this approach, the business process under design is usually documented by data flow and structure diagrams. Figure 14-6 shows the use of these techniques to depict the top-down decomposition of a hypothetical business process.

We can see from these diagrams how the systems designer follows a top-down approach. The designer starts with an abstract description of the system and, through successive steps, redefines this view to produce a more detailed description. In our example, Process 2.0 in the context diagram is decomposed into an intermediate-level data flow diagram (DFD). Process 2.3 in the intermediate DFD is further decomposed into an elementary DFD. This decomposition could involve several levels to obtain sufficient details. Let’s assume that three levels are sufficient in this case. The final step transforms Process 2.3.3 into a structure diagram that defines the program modules that will constitute the process.

THE OBJECT-ORIENTED DESIGN APPROACH

The object-oriented design approach is to build information systems from reusable standard components or objects. This approach may be equated to the process of building an automobile. Car manufacturers do not create each new model from scratch. New models are actually built from standard components that also go into other models. For example, each model of car that a particular manufacturer produces may use the same type of engine, gearbox, alternator, rear axle, radio, and so on. Some of the car’s components will be industry-standard products that other manufacturers use. Such things as wheels, tires, spark plugs, and headlights fall into this category. In fact, it may be that the only component actually created from scratch for a new car model is the body.

The automobile industry operates in this fashion to stay competitive. By using standard components, car manufacturers minimize production and maintenance costs. At the same time, they can remain responsive to consumer demands for new products and preserve manufacturing flexibility by mixing and matching components according to the customer’s specification.

The concept of reusability is central to the object-oriented design approach to systems design. Once created, standard modules can be used in other systems with similar needs. Ideally, the systems professionals of the organization will create a library (inventory) of modules that other systems designers within the firm can use. The benefits of this approach are similar to those stated for the automobile example. They include reduced time and cost for development, maintenance, and testing and improved user support and flexibility in the development process.

Elements of the Object-Oriented Design Approach

A distinctive characteristic of the object-oriented design approach is that both data and programming logic, such as integrity tests, accounting rules, and updating procedures, are encapsulated in modules to represent objects. The following discussion deals with the principal elements of the object-oriented approach.

OBJECTS. Objects are equivalent to nouns in the English language. For example, vendors, customers, inventory, and accounts are all objects. These objects possess two characteristics: attributes and methods.

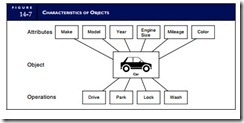

Attributes are the data that describe the objects. Methods are the actions that are performed on or by objects that may change their attributes. Figure 14-7 illustrates these characteristics with a nonfinancial example. The object in this example is an automobile whose attributes are make, model, year, engine size, mileage, and color. Methods that may be performed on this object include drive, park, lock, and wash. Note that if we perform a drive method on the object, the mileage attribute will be changed.

Figure 14-8 illustrates these points with an inventory accounting example. In this example, the object is inventory and its attributes are part number, description, quantity on hand, reorder point, order quantity, and supplier number. The methods that may be performed on inventory are reduce inventory (from product sales), review available quantity on hand, reorder inventory (when quantity on hand is less than the reorder point), and replace inventory (from inventory receipts). Again, note that methods such as reduce inventory and replace inventory change the attribute quantity on hand.
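The inventory object just described can be sketched in code. The following is a minimal illustration in Python, not a prescribed implementation: the class name `InventoryItem`, the method names, and the sample values are assumptions made for this example, and the reorder method is modeled simply as a check against the reorder point.

```python
from dataclasses import dataclass


@dataclass
class InventoryItem:
    """One inventory object: attributes plus the methods that act on them."""

    # Attributes (the data that describe the object)
    part_number: str
    description: str
    quantity_on_hand: int
    reorder_point: int
    order_quantity: int
    supplier_number: str

    def reduce(self, quantity_sold: int) -> None:
        """Reduce inventory after a product sale."""
        if quantity_sold > self.quantity_on_hand:
            raise ValueError("cannot sell more than the quantity on hand")
        self.quantity_on_hand -= quantity_sold

    def review(self) -> int:
        """Review the available quantity on hand (reads it, does not change it)."""
        return self.quantity_on_hand

    def needs_reorder(self) -> bool:
        """A reorder is due when quantity on hand is below the reorder point."""
        return self.quantity_on_hand < self.reorder_point

    def replace(self, quantity_received: int) -> None:
        """Replace inventory from an inventory receipt."""
        self.quantity_on_hand += quantity_received


# Hypothetical sample data
pump = InventoryItem("P-100", "Water pump", 12, 10, 25, "S-7")
pump.reduce(5)                          # a sale lowers quantity on hand to 7
if pump.needs_reorder():                # 7 is below the reorder point of 10
    pump.replace(pump.order_quantity)   # the receipt raises it to 32
```

Here the sale and the receipt both change the quantity-on-hand attribute, whereas the review method only reports it.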

CLASSES AND INSTANCES. An object class is a logical grouping of individual objects that share the same attributes and methods. An instance is a single occurrence of an object within a class. For example, Figure 14-9 shows the inventory class consisting of several instances or specific inventory types.

INHERITANCE. Inheritance means that each object instance inherits the attributes and methods of the class to which it belongs. For example, all instances within the inventory class hierarchy share the attributes of part number, description, and quantity on hand. These attributes would be defined once and only once for the inventory object. Thus, the object instances of wheel bearing, water pump, and alternator will inherit these attributes. Likewise, these instances will inherit the methods (reduce, review, reorder, and replace) defined for the class.

Object classes can also inherit from other object classes. For example, Figure 14-10 shows an object hierarchy made up of an object class called control and three subclasses called accounts payable, accounts receivable, and inventory. This diagramming technique is an example of unified modeling language (UML). The object is represented as a rectangle with three levels: name, attributes, and methods.

The three object subclasses have certain control methods in common. For example, no account should be updated without first verifying the values Vendor Number, Customer Number, or Part Number. This method (and others) may be specified for the control object (once and only once), and then all the subclass objects to which this method applies inherit it.

Because object-oriented designs support the objective of reusability, portions of systems, or entire systems, can be created from modules that already exist. For example, any future system that requires the attributes and methods that the existing control module specifies can inherit them by being designated a subclass object.
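A short sketch may help make class-to-class inheritance concrete. The Python code below is illustrative only: the class names follow the control hierarchy described above, but the method names (`verify_key`, `update`), the `balances` attribute, and the sample vendor numbers are assumptions introduced for this example.

```python
class Control:
    """Superclass holding control methods shared by all subclasses."""

    key_name = "Key"  # each subclass names the key it verifies

    def __init__(self, valid_keys):
        self._valid_keys = set(valid_keys)
        self.balances = {key: 0.0 for key in self._valid_keys}

    def verify_key(self, key):
        """Control method defined once: reject unknown key values."""
        if key not in self._valid_keys:
            raise KeyError(f"invalid {self.key_name}: {key}")

    def update(self, key, amount):
        """No record is updated without first verifying its key."""
        self.verify_key(key)
        self.balances[key] += amount


class AccountsPayable(Control):
    key_name = "Vendor Number"


class AccountsReceivable(Control):
    key_name = "Customer Number"


class Inventory(Control):
    key_name = "Part Number"


# Hypothetical vendor numbers
payables = AccountsPayable(valid_keys=["V001", "V002"])
payables.update("V001", 250.0)       # passes the inherited check

try:
    payables.update("V999", 10.0)    # unknown vendor is rejected
    rejected = False
except KeyError:
    rejected = True
```

The verification logic is written once in the superclass. Each subclass supplies only what differs (here, the name of its key), and a future subclass would obtain the same control by inheriting from `Control`.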

Finally, the object-oriented approach offers the potential of increased security over the structured model. Each object’s collection of methods, which creates an impenetrable wall of code around the data, determines its functionality (behavior). This means that the internal data of the object can be manipulated only by its methods. Direct access to the object’s internal structure is not permitted.

SYSTEM DESIGN

The purpose of the design phase is to produce a detailed description of the proposed system that both satisfies the system requirements identified during systems analysis and is in accordance with the conceptual design. In this phase, all system components (user views, database tables, processes, and controls) are meticulously specified. At the end of this phase, these components are presented formally in a detailed design report. This report constitutes a set of blueprints that specify input screen formats, output report layouts, database structures, and process logic. These completed plans then proceed to the final phase in the SDLC, system implementation, in which the system is physically constructed.

The Design Sequence

The systems design phase of the SDLC follows a logical sequence of events: create a data model of the business process, define conceptual user views, design the normalized database tables, design the physical user views (output and input views), develop the process modules, specify the system controls, and perform a system walk-through. In this section, each of the design steps is examined in detail.

An Iterative Approach

Typically, the design sequence listed in the previous section is not a purely linear process. Inevitably, system requirements change during the detailed design phase, causing the designer to revisit previous steps. For example, a last-minute change in the process design may influence data collection requirements, which in turn change the user view and require alterations to the database tables.

To deal with this material as concisely and clearly as possible, the detailed design phase is presented here as a neat linear process. However, the reader should recognize its circular nature. This characteristic has control implications for both accountants and management. For example, a control issue that was previously resolved may need to be revisited as a result of modifications to the design.

DATA MODELING, CONCEPTUAL VIEWS, AND NORMALIZED TABLES

Data modeling is the task of formalizing the data requirements of the business process as a conceptual model. The primary documentation instrument used for data modeling is the entity relationship (ER) diagram. This technique is used to depict the entities or data objects in the system. Once the entities have been represented in the data model, the data attributes that define each entity can then be described. They should be determined by careful analysis of user needs and may include both financial and nonfinancial data. These attributes represent the conceptual user views that must be supported by normalized database tables. To the extent that the data requirements of all users have been properly specified in the data model, the resulting databases will support multiple user views. We described data modeling, defining user views, and designing normalized base tables in Chapter 9 and its appendix.

DESIGN PHYSICAL USER VIEWS

The physical views are the media for conveying and presenting data. These include output reports, documents, and input screens. The remainder of this section deals with a number of issues related to the design of physical user views. The discussion examines output and input views separately.

Design Output Views

Output is the information the system produces to support user tasks and decisions. Table 14-1 presents examples of output that several AIS subsystems produce. At the transaction processing level, output tends to be extremely detailed. Revenue and expenditure cycle systems produce control reports for lower-level management and operational documents to support daily activities. Conversion cycle systems produce reports for scheduling production, managing inventory, and cost management. These systems also produce documents for controlling the manufacturing process.

The general ledger/financial reporting system (GL/FRS) and the management reporting system (MRS) produce output that is more summarized. The intended users of these systems are management, stockholders, and other interested parties outside the firm. The GL/FRS is a nondiscretionary reporting system that produces formal reports required by law. These include financial statements, tax returns, and other reports that regulatory agencies demand. The output requirements of the GL/FRS tend to be predictable and stable over time and between organizations.

The management reporting system (MRS) serves the needs of internal management users. MRS applications may be stand-alone systems, or they may be integrated in the revenue, conversion, and expenditure cycles to produce output that contains both financial and nonfinancial information. The MRS produces problem-specific reports that vary considerably between business entities.

OUTPUT ATTRIBUTES. Regardless of their physical form, whether operational documents, financial statements, or discretionary reports, output views should possess the following attributes: relevance, summarization, exception orientation, timeliness, accuracy, completeness, and conciseness.

RELEVANCE. Each element of information output must support the user’s decision or task. Irrelevant facts waste resources and detract from the information content of the output. Output documents that contain unnecessary facts tend to be cluttered, take time to process, cause bottlenecks, and promote errors.

SUMMARIZATION. Reports should be summarized according to the level of the user in the organization. The degree of summarization increases as information flows upward from lower-level managers to top management. We see this characteristic clearly in the responsibility reports represented in Figure 14-11.

EXCEPTION ORIENTATION. Operations control reports should identify activities that are about to go out of control and ignore those that are functioning within normal limits. This allows managers to focus their attention on areas of greatest need. An example of this is illustrated with the inventory reorder report presented in Figure 14-12. Only the items that need to be ordered are listed on the report.
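The filtering logic behind such an exception report is simple, as the following Python sketch suggests. The part numbers, quantities, and field names are hypothetical and are not taken from Figure 14-12.

```python
# Hypothetical inventory records; field names are assumptions for this sketch.
inventory = [
    {"part_number": "A10", "description": "Wheel bearing", "on_hand": 40, "reorder_point": 25},
    {"part_number": "B20", "description": "Water pump", "on_hand": 8, "reorder_point": 10},
    {"part_number": "C30", "description": "Alternator", "on_hand": 15, "reorder_point": 15},
]


def reorder_report(items):
    """Return only the exceptions: items whose quantity on hand
    has fallen below the reorder point."""
    return [item for item in items if item["on_hand"] < item["reorder_point"]]


report = reorder_report(inventory)
for item in report:
    print(item["part_number"], item["description"], item["on_hand"])
```

Items that are within normal limits never reach the manager, so the report stays short no matter how large the inventory file grows.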

TIMELINESS. Timely information that is reasonably accurate and complete is more valuable than perfect information that comes too late to be useful. Therefore, the system must provide the user with information that is timely enough to support the desired action.

ACCURACY. Information output must be free of material errors. A material error is one that causes the user to take an incorrect action or to fail to take the correct action. Operational documents and low-level control reports usually require a high degree of accuracy. However, for certain planning reports and reports that support rapid decision making, the system designer may need to sacrifice accuracy to produce information that is timely. Managers cannot always wait until they have all the facts before they must act. The designer must seek a balance between the competing needs for accuracy and timeliness when designing output reports.

CONCISENESS. Information output should be presented as concisely as possible within the report or document. Output should use coding schemes to represent complex data classifications. Also, information should be clearly presented with titles for all values. Reports should be visually pleasing and logically organized.

OUTPUT REPORTING TECHNIQUES. While recognizing that differences in cognitive styles exist among managers, systems designers must determine the output type and format most useful to the user. Some managers prefer output that presents information in tables and matrices. Others prefer information that is visually oriented in the form of graphs and charts. The issue of whether the output should be hard copy (paper) or electronic must also be addressed.

Despite predictions for two decades or more, we have not yet achieved a paperless society. On the contrary, trees continue to be harvested and paper mills continue to be productive. In some firms, top management receives hundreds of pages of paper output each day. Paper documents also continue to flow at lower organizational levels.

On the other hand, many firms are moving to paperless audit trails and support daily tasks with electronic documents. Insurance companies, law firms, and mortgage companies make extensive use of electronic documents. The use of electronic output greatly reduces or eliminates the problems associated with paper documents (purchasing, handling, storage, and disposal). However, the use of electronic output has obvious implications for accounting and auditing.

The query and report-generating features of modern database management systems permit the manager to quickly create standard and customized output reports. Custom reports can present information in different formats, including text, matrices, tables, and graphs. Section 1 in the chapter appendix examines these formats and provides some examples.

Design Input Views

Data input views are used to capture the relevant facts about the resources, events, and agents involved in business process transactions. In this section, we divide input into two classes: hard-copy input and electronic input.

DESIGN HARD-COPY INPUT. Businesses today still make extensive use of paper input documents. In designing hard copy documents, the system designer must keep in mind several aspects of the physical business process.

HANDLING. How will the document be handled? Will it be on the shop floor around grease and oil? How many hands must it go through? Is it likely to get folded, creased, or torn? Input forms are part of the audit trail and must be preserved in legible form. If they are to be subjected to physical abuse, they must be made of high-quality paper.

STORAGE. How long will the form be stored? What is the storage environment? Length of storage time and environmental conditions will influence the appearance of the form. Data entered onto poor-quality paper may fade under extreme conditions. Again, this may have audit trail implications. A related consideration is the need to protect the form against erasures.

NUMBER OF COPIES. Source documents are often created in multiple copies to trigger multiple activities simultaneously and provide a basis for reconciliation. For example, the system may require that individual copies of sales orders go to the warehouse, the shipping department, billing, and accounts receivable. Manifold forms are often used in such cases. A manifold form produces several carbon copies from a single writing. The copies are normally color-coded to facilitate distribution to the correct users.

FORM SIZE. The average number of facts captured for each transaction affects the size of the form. For example, if the average number of items received from the supplier for each purchase is 20, the receiving report should be long enough to record them all. Otherwise, additional copies will be needed, which will add to the clerical work, clog the system, and promote processing errors.

Standard form sizes are full-size, 8½ by 11 inches; and half-size, 8½ by 5½ inches. Card form standards are 8 by 10 inches and 8 by 5 inches. The use of nonstandard forms can cause handling and storage problems and should be avoided.

FORM DESIGN. Clerical errors and omissions can cause serious processing problems. Input forms must be designed to be easy to use and collect the data as efficiently and effectively as possible. This requires that forms be logically organized and visually comfortable to the user. Two techniques used in well-designed forms are zones and embedded instructions.

ZONES. Zones are areas on the form that contain related data. Figure 14-13 provides an example of a form divided into zones. Each zone should be constructed of lines, captions, or boxes that guide the user’s eye to avoid errors and omissions.

EMBEDDED INSTRUCTIONS. Embedded instructions are contained within the body of the form itself rather than on a separate sheet. It is important to place instructions directly in the zone to which they pertain. If an instruction pertains to the entire form, it should be placed at the top of the form. Instructions should be brief and unambiguous. As an instructive technique, active voice is stronger, more efficient (needing fewer words), and less ambiguous than passive voice. For example, the first instruction that follows is written in passive voice. The second is in active voice.

1. This form should be completed in ink.

2. Complete this form in ink.

Notice the difference: the second sentence is stronger, shorter, and clearer than the first; it is an instruction rather than a suggestion.

DESIGN ELECTRONIC INPUT. Electronic input techniques fall into two basic types: input from source documents and direct input. Figure 14-14 illustrates the difference in these techniques. Input from source documents involves the collection of data on paper forms that are then transcribed to electronic forms in a separate operation. Direct input procedures capture data directly in electronic form, via terminals at the source of the transaction.

INPUT FROM SOURCE DOCUMENTS. Firms use paper source documents for a number of reasons. Some firms prefer to maintain a paper audit trail that goes back to the source of an economic event. Some companies capture data onto paper documents because direct input procedures may be inconvenient or impossible. Other firms achieve economies of scale by centralizing electronic data collection from paper documents.

An important aspect of this approach is to design input screens that visually reflect the source document. The captions and data fields should be arranged on the electronic form exactly as they are on the source document. This minimizes eye movement between the source and the screen and maximizes throughput of work.

DIRECT INPUT. Direct data input requires that data collection technology be distributed to the source of the transaction. A very common example of this is the point-of-sale terminal in a department store.

An advantage of direct input is the reduction of input errors that plague downstream processing. By collecting data once, at the source, clerical errors are reduced because the subsequent transcription step associated with paper documents is eliminated. The more times a transaction is manually transcribed, the greater the potential for error.

Direct data collection uses intelligent forms for online editing that help the user complete the form and make calculations automatically. The input screen is attached to a computer that performs logical checks on the data being entered. This reduces input errors and improves the efficiency of the data collection procedures. During data entry, the intelligent form will detect transcription errors, such as illegal characters in a field, incorrect amounts, and invalid item numbers. A beep can be used to draw attention to an error, an illegal action, or a screen message. Thus, corrections to input can be made on the spot.

Given minimal input, an intelligent form can complete the input process automatically. For example, a sales clerk need enter in the terminal only the item numbers and quantities of products sold. The system will automatically provide the descriptions, prices, price extensions, taxes, and freight charges and calculate the grand total. Many time-consuming and error-prone activities are eliminated through this technique. Modern relational database packages have a screen painting feature that allows the user to quickly and easily create intelligent input forms.
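The behavior of an intelligent form can be approximated in a few lines of code. The sketch below is written in Python under stated assumptions: the price list, the 8 percent tax rate, and the function name `complete_sale` are invented for illustration, and freight charges are omitted for brevity.

```python
from decimal import Decimal

# Hypothetical price list keyed by item number: (description, unit price)
PRICES = {
    "1001": ("Wheel bearing", Decimal("12.50")),
    "1002": ("Water pump", Decimal("48.00")),
}
TAX_RATE = Decimal("0.08")  # assumed rate for this sketch


def complete_sale(entries):
    """Validate (item_number, quantity) pairs keyed by the clerk and
    fill in descriptions, prices, extensions, tax, and the total."""
    lines, errors = [], []
    for item_number, quantity in entries:
        if not item_number.isdigit():
            errors.append(f"{item_number}: illegal characters in item number")
        elif item_number not in PRICES:
            errors.append(f"{item_number}: invalid item number")
        elif quantity <= 0:
            errors.append(f"{item_number}: quantity must be positive")
        else:
            description, price = PRICES[item_number]
            lines.append({
                "item_number": item_number,
                "description": description,
                "price": price,
                "quantity": quantity,
                "extension": price * quantity,
            })
    subtotal = sum((line["extension"] for line in lines), Decimal("0"))
    tax = (subtotal * TAX_RATE).quantize(Decimal("0.01"))
    return {"lines": lines, "subtotal": subtotal, "tax": tax,
            "total": subtotal + tax, "errors": errors}


# The clerk keys only item numbers and quantities; one entry is mistyped.
sale = complete_sale([("1001", 2), ("1002", 1), ("10X3", 1)])
```

The mistyped entry is reported immediately rather than passed downstream, while the valid lines are completed from data the system already holds.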

DATA ENTRY DEVICES. A number of data entry devices are used to support direct electronic input. These include point-of-sale terminals, magnetic ink character recognition devices, optical character recognition devices, automatic teller machines, and voice recognition devices.

DESIGN THE SYSTEM PROCESS

Now that the database tables and user views for the system have been designed, we are ready to design the process component. This starts with the DFDs that were produced in the general design phase. Depending on the extent of the activities performed in the general design phase, the system may be specified at the context level or may be refined in lower-level DFDs. The first task is to decompose the existing DFDs to a degree of detail that will serve as the basis for creating structure diagrams. The structure diagrams will provide the blueprints for writing the actual program modules.

Decompose High-Level DFDs

To demonstrate the decomposition process, we will use the intermediate DFD of the purchases and cash disbursements system illustrated in Figure 14-15. This DFD was decomposed from the context-level DFD (Figure 13-6, Option A) originally prepared in the conceptual design phase. We will concentrate on the accounts payable process numbered 1.4 in the diagram. This process is not yet sufficiently detailed to produce program modules.

Figure 14-16 shows Process 1.4 decomposed into the next level of detail. Each of the resulting subprocesses is numbered with a third-level designator, such as 1.4.1, 1.4.2, 1.4.3, and so on. We will assume that this level of DFD provides sufficient detail to prepare a structure diagram of program modules. Many CASE tools will automatically convert DFDs to structure diagrams. However, to illustrate the concept, we will go through the process manually.

Design Structure Diagrams

The creation of the structure diagram requires analysis of the DFD to divide its processes into input, process, and output functions. Figure 14-17 presents a structure diagram showing the program modules based on the DFD in Figure 14-16.

The Modular Approach

The modular approach presented in Figure 14-17 involves arranging the system in a hierarchy of small, discrete modules, each of which performs a single task. Correctly designed modules possess two attributes: (1) they are loosely coupled and (2) they have strong cohesion. Coupling measures the degree of interaction between modules. Interaction is the exchange of data between modules. A loosely coupled module is independent of the others. Modules with a great deal of interaction are tightly coupled. Figure 14-18 shows the relationship between modules in loosely coupled and tightly coupled designs.

In the loosely coupled system, the process starts with Module A. This module controls all the data flowing through the system. The other modules interact only with this module to send and receive data. Module A then redirects the data to other modules.

Cohesion refers to the number of tasks a module performs. Strong cohesion means that each module performs a single, well-defined task. Returning to Figure 14-17, Module D gets receiving reports and only that. It does not compare reports to open purchase orders, nor does it update accounts payable. Separate modules perform these tasks.

Modules that are loosely coupled and strongly cohesive are much easier to understand and easier to maintain. Maintenance is an error-prone process, and it is not uncommon for errors to be accidentally inserted into a module during maintenance. Thus, changing a single module within a tightly coupled structure can have an impact on the other modules with which it interacts. Look again at the tightly coupled structure in Figure 14-18. These interactions complicate maintenance by extending the process to the other modules. Similarly, modules with weak cohesion—those that perform several tasks—are more complex and difficult to maintain.
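The following Python sketch shows what loosely coupled, strongly cohesive modules might look like. It is loosely patterned on the tasks named in the discussion above (getting receiving reports, comparing them to open purchase orders, and updating accounts payable), but the function names, record layouts, and sample values are assumptions and do not reproduce Figure 14-17.

```python
def get_receiving_report(receiving_reports, po_number):
    """Input module: fetch a receiving report and nothing else."""
    return receiving_reports[po_number]


def matches_purchase_order(receiving_report, purchase_order):
    """Process module: compare a report to its open purchase order."""
    return receiving_report["quantity"] == purchase_order["quantity"]


def update_accounts_payable(ledger, vendor, amount):
    """Output module: post the liability to the vendor's account."""
    ledger[vendor] = ledger.get(vendor, 0) + amount


def process_receipt(po_number, receiving_reports, purchase_orders, ledger):
    """Coordinating module: the only place where data passes
    between the lower-level modules."""
    report = get_receiving_report(receiving_reports, po_number)
    order = purchase_orders[po_number]
    if matches_purchase_order(report, order):
        update_accounts_payable(ledger, order["vendor"], order["amount"])
        return True
    return False


# Hypothetical sample data
receiving_reports = {"PO-1": {"quantity": 20}}
purchase_orders = {"PO-1": {"vendor": "V001", "quantity": 20, "amount": 500.0}}
ledger = {}
posted = process_receipt("PO-1", receiving_reports, purchase_orders, ledger)
```

Because the lower-level functions never call one another, a change to the comparison rule touches only `matches_purchase_order`, and each function can be tested in isolation.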

Pseudocode the System Modules

Each module in Figure 14-17 represents a separate computer program. The higher-level programs will communicate with lower-level programs through call commands. System modules are coded in the implementation phase. When we get to that phase, we will examine programming language options. At this point, the designer must specify the functional characteristics of the modules through other techniques.

Next, we illustrate how pseudocode may be used to describe the function of Module F in Figure 14-17. This module authorizes payment of accounts payable by validating the supporting documents.

COMPARE-DOCS (Module F)
    READ PR-RECORD FROM PR-FILE
    READ PO-RECORD FROM PO-FILE
    READ RR-RECORD FROM RR-FILE
    READ INVOICE-RECORD FROM INVOICE-FILE
    IF ITEM-NUM, QUANTITY-RECEIVED, TOTAL-AMOUNT IS EQUAL FOR ALL RECORDS
        THEN PLACE "Y" IN AUTHORIZED FIELD OF PO-RECORD
    ELSE READ ANOTHER RECORD

The use of pseudocode for specifying module functions has two advantages. First, the designer can express the detailed logic of the module, regardless of the programming language to be used. Second, although the end user may lack programming skills, he or she can be actively involved in this technical but crucial step.
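To show how such pseudocode might later be translated by a programmer, here is one possible rendering of the comparison logic in Python. It is a sketch under assumptions: records are represented as dictionaries, the field names (`item_num`, `quantity`, `total_amount`, `authorized`) are invented for the example, and file handling is left out.

```python
def compare_docs(pr_record, po_record, rr_record, invoice_record):
    """Authorize payment only if the supporting documents agree.

    Returns True and marks the purchase order as authorized when the
    item number, quantity, and total amount match on all four records.
    """
    records = (pr_record, po_record, rr_record, invoice_record)
    fields = ("item_num", "quantity", "total_amount")
    # A field agrees when all records hold exactly one distinct value for it.
    agree = all(len({record[field] for record in records}) == 1
                for field in fields)
    if agree:
        po_record["authorized"] = "Y"
    return agree


# Hypothetical matching documents
doc = {"item_num": "1001", "quantity": 20, "total_amount": 250.0}
po = dict(doc)
authorized = compare_docs(dict(doc), po, dict(doc), dict(doc))

# A receiving report with a short quantity blocks authorization
short_rr = dict(doc, quantity=18)
po2 = dict(doc)
blocked = not compare_docs(dict(doc), po2, short_rr, dict(doc))
```

A calling program would move on to the next set of records when the function returns False, which corresponds to the ELSE branch of the pseudocode.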

DESIGN SYSTEM CONTROLS

The last step in the design phase is the design of system controls. This includes computer processing controls, database controls, manual controls over input to and output from the system, as well as controls over the operational environment (for example, distributed data processing controls). In practice, many controls that are specific to a type of technology or technique will, at this point, have already been designed, along with the modules to which they relate. This step in the design phase allows the design team to review, modify, and evaluate controls with a systemwide perspective that did not exist when each module was being designed independently. Because of the extensive nature of computer-based system controls, treatment of this aspect of the systems design is deferred to Chapters 15, 16, and 17, where they can be covered in depth.

PERFORM A SYSTEM DESIGN WALK-THROUGH

After completing the detailed design, the development team usually performs a system design walk-through to ensure the design is free from conceptual errors that could become programmed into the final system. Many firms have formal, structured walk-throughs that a quality assurance group conducts. This is an independent group of programmers, analysts, users, and internal auditors. The job of this group is to simulate the operation of the system to uncover errors, omissions, and ambiguities in the design. Most system errors emanate from poor designs rather than programming mistakes. Detecting and correcting errors in the design thus reduce costly reprogramming later.

Review System Documentation

The detailed design report documents and describes the system to this point. This report includes:

• Designs of all screen outputs, reports, and operational documents.

• ER diagrams describing the data relations in the system.

• Third normal form designs for database tables specifying all data elements.

• An updated data dictionary describing each data element in the database.

• Designs for all screen inputs and source documents for the system.

• Context diagrams for the overall system.

• Low-level data flow diagrams of specific system processes.

• Structure diagrams for the program modules in the system, including a pseudocode description of each module.

The quality assurance group scrutinizes these documents, and any errors are recorded in a walk-through report. Depending on the extent of the system errors, the quality assurance group will make a recommendation. The system design will be either accepted without modification, accepted subject to modification of minor errors, or rejected because of material errors.

At this point, a decision is made either to return the system for additional design or to proceed to the next phase—systems implementation. Assuming the design goes forward, the documents just mentioned constitute the blueprints that guide programmers and system designers in constructing the physical system.

PROGRAM APPLICATION SOFTWARE

The next stage of in-house development is to select a programming language from among the various languages available and suitable to the application. These include procedural languages such as COBOL, event-driven languages such as Visual Basic, and object-oriented programming (OOP) languages such as Java or C++. This section presents a brief overview of various programming approaches. Systems professionals will make their decision based on the in-house standards, architecture, and user needs.

Procedural Languages

A procedural language requires the programmer to specify the precise order in which the program logic is executed. Procedural languages are often called third-generation languages (3GLs). Examples of 3GLs include COBOL, FORTRAN, C, and PL/1. In business (particularly in accounting) applications, COBOL was the dominant language for years. COBOL has great capability for performing highly detailed operations on individual data records and handles large files efficiently. On the other hand, it is an extremely wordy language that makes programming a time-consuming task. COBOL has survived as a viable language because many of the legacy systems written in the 1970s and 1980s, which were coded in COBOL, are still in operation today. Major retrofits and routine maintenance to these systems need to be coded in COBOL. More than 12 billion lines of COBOL code are executed daily in the United States.

Event-Driven Languages

Event-driven languages are no longer procedural. Under this model, the program’s code is not executed in a predefined sequence. Instead, external actions or events that the user initiates dictate the control flow of the program. For example, when the user presses a key or clicks on a computer icon on the screen, the program automatically executes code associated with that event. This is a fundamental shift from the 3GL era. Now, instead of designing applications that execute sequentially from top to bottom in accordance with the way the programmer thinks they should function, the user is in control.
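The shift in control flow can be illustrated with a minimal sketch in Python. It is not Visual Basic and does not use a real user interface; the `handlers` registry, the `on` decorator, and the event names are hypothetical and exist only for this illustration. The point is that the handlers run in the order the user's actions arrive, not in the order they appear in the source code.

```python
from typing import Callable, Dict, List

# Hypothetical registry that maps an event name to the code handling it.
handlers: Dict[str, Callable[[], str]] = {}


def on(event_name: str):
    """Register the decorated function as the handler for event_name."""
    def register(func: Callable[[], str]) -> Callable[[], str]:
        handlers[event_name] = func
        return func
    return register


@on("key_press")
def handle_key_press() -> str:
    return "key handled"


@on("icon_click")
def handle_icon_click() -> str:
    return "icon handled"


def run(events: List[str]) -> List[str]:
    """Execute handlers in the order events arrive; ignore unknown events."""
    return [handlers[name]() for name in events if name in handlers]


# The user, not the programmer, decides this sequence at run time.
user_actions = ["icon_click", "key_press", "icon_click"]
results = run(user_actions)
```

A procedural program would instead call its routines in one fixed sequence written by the programmer.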

Microsoft’s Visual Basic is the most popular example of an event-driven language. The syntax of the language is simple yet powerful. Visual Basic is used to create real-time and batch applications that can manipulate flat files or relational databases. It has a screen-painting feature that greatly facilitates the creation of sophisticated graphical user interfaces.

Object-Oriented Languages

Central to achieving the benefits of the object-oriented approach is developing software in an object-oriented programming (OOP) language. The most popular true OOP languages are Java and Smalltalk. However, the learning curve of OOP languages is steep. The time and cost of retooling for OOP are the greatest impediments to the transition process. Most firms are not prepared to discard millions of lines of traditional COBOL code and retrain their programming staffs to implement object-oriented systems. Therefore, a compromise, intended to ease this transition, has been the development of hybrid languages, such as Object COBOL, Object Pascal, and C++.

Programming the System

Regardless of the programming language used, modern programs should follow a modular approach. This technique produces small programs that perform narrowly defined tasks. The following three benefits are associated with modular programming.

PROGRAMMING EFFICIENCY. Modules can be coded and tested independently, which vastly reduces programming time. A firm can assign several programmers to a single system. Working in parallel, the programmers each design a few modules. These are then assembled into the completed system.

MAINTENANCE EFFICIENCY. Small modules are easier to analyze and change, which reduces the start-up time during program maintenance. Extensive changes can be parceled out to several programmers simultaneously to shorten maintenance time.

CONTROL. Small modules are less likely to contain material errors or fraudulent logic. Because each module is independent of the others, errors are contained within the module.
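As a rough illustration of the modular approach, the following Python sketch splits a payment-posting task into three small modules. The function names, the vendor account code, and the business rule (only positive payments are accepted) are assumptions made for this example and are not taken from the system described in the text.

```python
from typing import Dict


def validate_amount(amount: float) -> bool:
    """Module 1 (hypothetical rule): accept only positive payment amounts."""
    return amount > 0


def apply_payment(balance: float, amount: float) -> float:
    """Module 2: reduce a balance by a payment, rounded to cents."""
    return round(balance - amount, 2)


def process_payment(balances: Dict[str, float], account: str, amount: float) -> bool:
    """Module 3: coordinate the other modules; return True if posted."""
    if account not in balances or not validate_amount(amount):
        return False
    balances[account] = apply_payment(balances[account], amount)
    return True
```

Because each function performs one narrowly defined task, different programmers could code and test them separately, and a change to the validation rule would touch only `validate_amount`.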

SOFTWARE TESTING

Programs must be thoroughly tested before they are implemented. Program testing issues of direct concern to accountants are discussed in this section.

Testing Individual Modules

Programmers should test completed modules independently before implementing them. This usually involves the creation of test data. Depending on the nature of the application, this could include test transaction files, test master files, or both. Figure 14-19 illustrates the test data approach. We examine this and several other testing techniques in detail in Chapter 17.

Assume the module under test is the Update AP Records program (Module H) represented in Figure 14-17. The approach taken is to test the application thoroughly within its range of functions. To do this, the programmer must create some test accounts payable master file records and test transactions. The transactions should contain a range of data values adequate to test the logic of the application, including both good and bad data. For example, the programmer may create a transaction with an incorrect account number to see how the application handles such errors. The programmer will then compare the amounts posted to accounts payable records to see if they tally with precalculated results. Tests of all aspects of the logic are performed in this way, and the test results are used to identify and correct errors in the logic of the module.
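The test data approach described above might look like the following Python sketch. The text does not specify the logic of the Update AP Records program, so the `update_ap_records` function here is a hypothetical stand-in: it adds each transaction amount to the matching vendor balance and rejects transactions whose account number is not on the master file. The account numbers and amounts (held in whole cents to avoid rounding differences) are invented test data.

```python
from typing import Dict, List, Tuple

Transaction = Tuple[str, int]  # (account number, amount in cents)


def update_ap_records(
    master: Dict[str, int], transactions: List[Transaction]
) -> Tuple[Dict[str, int], List[Transaction]]:
    """Hypothetical module under test: post amounts, reject unknown accounts."""
    updated = dict(master)  # work on a copy so the test master file is preserved
    rejected: List[Transaction] = []
    for account, amount in transactions:
        if account not in updated:
            rejected.append((account, amount))
            continue
        updated[account] += amount
    return updated, rejected


# Test master file and test transactions, including one bad account number.
test_master = {"AP-1001": 100000, "AP-1002": 25000}
test_transactions = [("AP-1001", 20000), ("AP-1002", -5000), ("AP-9999", 7500)]

# Results calculated by hand before the test is run.
expected_master = {"AP-1001": 120000, "AP-1002": 20000}
expected_rejects = [("AP-9999", 7500)]

actual_master, actual_rejects = update_ap_records(test_master, test_transactions)
test_passed = actual_master == expected_master and actual_rejects == expected_rejects
```

If `test_passed` is false, the difference between the actual and precalculated results points the programmer to the logic that needs correcting.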
