Systems Development, Program Changes, and Application Controls: Testing Computer Application Controls

Testing Computer Application Controls

The appendix to Chapter 15 described how audit objectives are derived from management assertions such as existence or occurrence, completeness, accuracy, rights and obligations, valuation or allocation, and presentation and disclosure. Depending on the type of account being considered, a particular management assertion has different implications for the audit objective to be developed. Once developed, achieving the audit objectives requires designing audit procedures to gather evidence that either corroborates or refutes the underlying management assertions. Generally, this involves a combination of tests of application controls and substantive tests of transaction details and account balances.

This section deals essentially with the tests of application controls, but at the end we will briefly review techniques for performing substantive tests. Tests of computer application controls follow two general approaches: (1) the black box (around the computer) approach and (2) the white box (through the computer) approach. First, the black box approach is examined. Then, several white box testing techniques are reviewed.

BLACK BOX APPROACH

Auditors performing black box testing do not rely on a detailed knowledge of the application’s internal logic. Instead, they analyze flowcharts and interview knowledgeable personnel in the client’s organization to understand the functional characteristics of the application. With an understanding of what the application is supposed to do, the auditor tests the application by reconciling production input transactions processed by the application with output results. The output results are analyzed to verify the application’s compliance with its functional requirements. Figure 17-9 illustrates the black box approach.

The advantage of the black box approach is that the application need not be removed from service and tested directly. This approach is feasible for testing applications that are relatively simple. However, complex applications—those that receive input from many sources, perform a variety of complex operations, or produce multiple outputs—often require a more focused testing approach to provide the auditor with evidence of application integrity.
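The input-to-output reconciliation at the heart of black box testing can be sketched in a few lines of Python. This is a minimal illustration, not a description of any particular audit tool. It assumes a hypothetical layout in which each transaction is an (ID, amount) pair and the application's output consists of a list of posted transactions and a list of rejected (error report) transactions. The application itself is never examined; only what goes in and what comes out.

```python
from collections import Counter
from decimal import Decimal


def reconcile_io(inputs, posted, rejected):
    """Reconcile the application's input transactions with its output results.

    Each argument is a list of (txn_id, amount) pairs; this record layout is
    hypothetical. An empty result means input and output reconcile.
    """
    outputs = posted + rejected
    in_count = Counter(txn_id for txn_id, _ in inputs)
    out_count = Counter(txn_id for txn_id, _ in outputs)
    findings = {}
    missing = in_count - out_count   # inputs that never reached any output
    extra = out_count - in_count     # outputs with no matching input, or duplicates
    if missing:
        findings["missing"] = sorted(missing)
    if extra:
        findings["unexpected"] = sorted(extra)
    in_total = sum((amount for _, amount in inputs), Decimal("0"))
    out_total = sum((amount for _, amount in outputs), Decimal("0"))
    if in_total != out_total:
        findings["amount_difference"] = in_total - out_total
    return findings
```

Because every input must appear exactly once in either the posted or the rejected output, a missing, duplicated, or altered transaction shows up without any knowledge of the application's internal logic.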

WHITE BOX APPROACH

The white box (through the computer) approach relies on an in-depth understanding of the internal logic of the application being tested. The white box approach includes several techniques for testing application logic directly. Typically these involve the creation of a small set of test transactions to verify specific aspects of an application’s logic and controls. In this way, auditors are able to conduct precise tests, with known variables, and obtain results that they can compare against objectively calculated results. The most common types of tests of controls include the following:

1. Authenticity tests, which verify that an individual, a programmed procedure, or a message (such as an electronic data interchange [EDI] transmission) attempting to access a system is authentic. Authenticity controls include user IDs, passwords, valid vendor codes, and authority tables.

2. Accuracy tests, which ensure that the system processes only data values that conform to specified tolerances. Examples include range tests, field tests, limit tests, and reasonableness tests.

3. Completeness tests, which identify missing data within a single record and entire records missing from a batch. The types of tests performed are field tests, record sequence tests, hash totals, and control totals.

4. Redundancy tests, which determine that an application processes each record only once. Redundancy controls include the reconciliation of batch totals, record counts, hash totals, and financial control totals.

5. Access tests, which ensure that the application prevents authorized users from gaining unauthorized access to data. Access controls include passwords, authority tables, user-defined procedures, data encryption, and inference controls.

6. Audit trail tests, which ensure that the application creates an adequate audit trail. This includes evidence that the application records all transactions in a transaction log, posts data values to the appropriate accounts, produces complete transaction listings, and generates error files and reports for all exceptions.

7. Rounding error tests, which verify the correctness of rounding procedures. Rounding errors occur in accounting information when the level of precision used in the calculation is greater than that used in the reporting. For example, interest calculations on bank account balances may have a precision of five decimal places, whereas only two decimal places are needed to report balances. If the remaining three decimal places are simply dropped, the total interest calculated for the total number of accounts may not equal the sum of the individual calculations.
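Several of the controls listed above (range tests, record sequence tests, hash totals, control totals, record counts, and duplicate detection) can be illustrated with one batch-checking routine. The following Python sketch is hypothetical: the record layout, the batch header fields, and the quantity range of 1 to 500 are assumptions chosen for illustration. An auditor's test data would deliberately include records that should trip each check.

```python
from collections import Counter
from dataclasses import dataclass
from decimal import Decimal


@dataclass(frozen=True)
class Record:           # hypothetical transaction record
    seq: int            # batch sequence number
    account: int        # customer account number
    quantity: int
    amount: Decimal


@dataclass(frozen=True)
class BatchHeader:      # hypothetical batch control record
    record_count: int
    hash_total: int     # sum of account numbers: meaningful only as a check
    control_total: Decimal


def check_batch(header, records, qty_range=(1, 500)):
    """Return a list of control exceptions found in one batch."""
    errors = []
    lo, hi = qty_range
    # Accuracy: range test on a numeric field.
    for r in records:
        if not lo <= r.quantity <= hi:
            errors.append(f"seq {r.seq}: quantity {r.quantity} out of range")
    # Completeness: record sequence test finds records missing from the batch.
    seqs = [r.seq for r in records]
    for s in sorted(set(range(1, header.record_count + 1)) - set(seqs)):
        errors.append(f"seq {s}: missing")
    # Redundancy: each record should be processed only once.
    for s, n in sorted(Counter(seqs).items()):
        if n > 1:
            errors.append(f"seq {s}: appears {n} times")
    # Batch totals: record count, hash total, and financial control total.
    if len(records) != header.record_count:
        errors.append("record count mismatch")
    if sum(r.account for r in records) != header.hash_total:
        errors.append("hash total mismatch")
    if sum((r.amount for r in records), Decimal("0")) != header.control_total:
        errors.append("control total mismatch")
    return errors
```

Note that a batch in which one record is duplicated and another is missing still passes the record count, but the sequence test and the hash total catch it. This is why the controls are typically used together.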

Figure 17-10 shows the logic for handling the rounding error problem. This technique uses an accumulator to keep track of the rounding differences between calculated and reported balances. Note how the sign and the absolute value of the amount in the accumulator determine how rounding affects the customer account. To illustrate, the rounding logic is applied to three hypothetical bank balances (see Table 17-1). The interest calculations are based on an interest rate of 5.25 percent.

Failure to properly account for the rounding difference in the example can result in an imbalance between the total (control) figure and the sum of the detail figures for each account. Poor accounting for rounding differences can also present an opportunity for fraud.
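The accumulator technique can be sketched in Python using the `decimal` module. This is a hedged approximation of the logic described above, not a reproduction of Figure 17-10: it assumes interest is calculated to five decimal places, each new balance is rounded to two places, and a cent is added to (or removed from) the current account whenever the accumulator reaches a full cent in either direction. The 5.25 percent rate is applied as a single-period rate for simplicity.

```python
from decimal import Decimal, ROUND_HALF_UP

CENT = Decimal("0.01")
FIVE_PLACES = Decimal("0.00001")


def post_interest(balances, rate):
    """Return (reported two-place balances, leftover accumulator).

    Sketch of accumulator-based rounding; branch thresholds are assumed.
    """
    accumulator = Decimal("0")
    reported = []
    for old_balance in balances:
        interest = (old_balance * rate).quantize(FIVE_PLACES)  # five-place precision
        new_balance = old_balance + interest
        rounded = new_balance.quantize(CENT, rounding=ROUND_HALF_UP)  # reported at two places
        accumulator += new_balance - rounded  # track what rounding gained or lost
        if accumulator >= CENT:        # positive surplus of a cent: credit this customer
            rounded += CENT
            accumulator -= CENT
        elif accumulator <= -CENT:     # negative deficit of a cent: debit this customer
            rounded -= CENT
            accumulator += CENT
        reported.append(rounded)
    return reported, accumulator
```

Because every cent moved into or out of an account is offset in the accumulator, the sum of the reported balances plus the accumulator always equals the sum of the full-precision balances. That equality is exactly what a rounding error test checks, and it is the control that a salami modification would break if the moved cents went to a different account.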

SALAMI FRAUD. Rounding programs are particularly susceptible to the so-called salami fraud. This fraud tends to affect large numbers of victims, but each in a minimal way. The fraud scheme takes its name from the analogy of slicing a large salami (the fraud objective) into many thin pieces. Each victim gets one of these small pieces and is unaware of being defrauded. For example, a programmer, or someone with access to the rounding program in Figure 17-10, can modify the rounding logic, thus perpetrating a salami fraud, as follows: at the point in the process where the algorithm should increase the current customer's account (that is, the accumulator value is > +.01), the program instead adds one cent to another account: the perpetrator's account. Although the absolute amount of each fraud transaction is small, given the hundreds of thousands of accounts that could be processed, the total amount of the fraud can become significant over time.

Most large public accounting firms have developed special audit software that can detect excessive file activity. In the case of the salami fraud, there would be thousands of entries into the computer criminal's personal account that the audit software may detect. A clever programmer may disguise this activity by funneling these entries through several intermediate accounts, which are then posted to a smaller number of intermediate accounts and finally to the programmer's personal account. By using many levels of accounts in this way, the activity in any single account is reduced, and the audit software may not detect it. An audit trail will remain, but it can be complicated to follow. The auditor can also use audit software to detect the existence of unauthorized (dummy) files that contain the intermediate accounts used in such a fraud.
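The firms' own audit software is proprietary, but the basic idea of an excessive-activity check can be sketched in Python. The sketch below is hypothetical: it counts very small credits posted to each account and flags accounts whose count exceeds a threshold. Both the 50-posting limit and the five-cent "tiny credit" ceiling are assumptions; in practice an auditor would derive them from the normal activity profile of the file.

```python
from collections import defaultdict
from decimal import Decimal


def flag_excessive_activity(postings, max_postings=50, tiny=Decimal("0.05")):
    """Flag accounts that receive unusually many tiny credits.

    postings: iterable of (account, amount) pairs (hypothetical layout).
    Returns {account: (number_of_tiny_credits, total_of_tiny_credits)}.
    """
    tiny_counts = defaultdict(int)
    tiny_totals = defaultdict(Decimal)   # Decimal() starts each total at 0
    for account, amount in postings:
        if Decimal("0") < amount <= tiny:
            tiny_counts[account] += 1
            tiny_totals[account] += amount
    return {
        account: (count, tiny_totals[account])
        for account, count in tiny_counts.items()
        if count > max_postings
    }
```

As the text warns, funneling the entries through layers of intermediate accounts lowers the count in each one, so a fixed per-account threshold like this can be evaded; it is a starting point, not a complete detection method.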

WHITE BOX TESTING TECHNIQUES

To illustrate how application controls are tested, this section describes five computer-assisted audit tools and techniques (CAATTs): the test data method, base case system evaluation, tracing, integrated test facility, and parallel simulation.

Test Data Method

The test data method is used to establish application integrity by processing specially prepared sets of input data through production applications that are under review. The results of each test are compared to predetermined expectations to obtain an objective assessment of application logic and control effectiveness. The test data technique is illustrated in Figure 17-11. To perform the test data technique, the auditor must obtain a copy of the production version of the application. In addition, test transaction files and test master files must be created. As illustrated in the figure, test transactions may enter the system from magnetic tape or disk, or via an input terminal. Results from the test run will be in the form of routine output reports, transaction listings, and error reports. In addition, the auditor must review the updated master files to determine that account balances have been correctly updated. The test results are then compared with the auditor's expected results to determine whether the application is functioning properly. This comparison may be performed manually or through special computer software.

Figure 17-12 lists selected hypothetical transactions and accounts receivable records that the auditor prepared to test a sales order processing application. The figure also shows an error report of rejected transactions and a listing of the updated accounts receivable master file. Any deviations between the actual results and those the auditor expects may indicate a logic or control problem.

CREATING TEST DATA. Creating test data requires a complete set of valid and invalid transactions. Incomplete test data may fail to explore critical branches of application logic and error checking routines. Test transactions should be designed to test all possible input errors, logical processes, and irregularities.
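A minimal Python sketch of the test data method follows. The function `process_order` is a hypothetical stand-in for the production sales order application, with assumed edit rules (valid account, positive amount, credit limit). The auditor's test cases pair each valid or invalid transaction with a predetermined expected result, and the updated master file is checked afterward, as the method requires.

```python
from decimal import Decimal


def process_order(master, txn):
    """Hypothetical production logic under review: edit and post one sales order."""
    account = master.get(txn["account"])
    if account is None:
        return "REJECT: invalid account"
    if txn["amount"] <= 0:
        return "REJECT: invalid amount"
    if account["balance"] + txn["amount"] > account["credit_limit"]:
        return "REJECT: credit limit exceeded"
    account["balance"] += txn["amount"]   # update the accounts receivable master
    return "POSTED"


def run_test_data(master, test_cases):
    """Process the auditor's test transactions and report any deviations.

    test_cases: list of (transaction, expected_result) pairs, processed in order.
    """
    deviations = []
    for txn, expected in test_cases:
        actual = process_order(master, txn)
        if actual != expected:
            deviations.append((txn, expected, actual))
    return deviations
```

Each invalid transaction targets one error-checking branch, so a complete set exercises every path; any deviation between actual and expected results, or an unexpected master balance, points to a possible logic or control problem.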

Gaining knowledge of the application’s internal logic sufficient to create meaningful test data may demand a large investment in time. The efficiency of this task can, however, be improved through careful planning during systems development. The auditor should save for future use the test data used to test program modules during the implementation phase of the SDLC. If the application has undergone no maintenance since its initial implementation, current audit test results should equal the original test results obtained at implementation. If the application has been modified, the auditor can create additional test data that focus on the areas of the program changes.

Base Case System Evaluation

Base case system evaluation (BCSE) is a variant of the test data approach. BCSE tests are conducted with a set of test transactions containing all possible transaction types. These are processed through repeated iterations during systems development testing until consistent and valid results are obtained. These results are the base case. When subsequent changes to the application occur during maintenance, their effects are evaluated by comparing current results with base case results.
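The comparison step of BCSE can be sketched in Python. The sketch assumes, hypothetically, that the accepted base case is stored as a JSON mapping from test-transaction IDs to the result the application produced, and that a later maintenance run yields a mapping of the same form. Any changed, missing, or new result is a change whose effect the auditor must evaluate.

```python
import json


def save_base_case(results):
    """Serialize the accepted base case (test-transaction ID -> result) for later runs."""
    return json.dumps(results, sort_keys=True)


def compare_to_base_case(saved, current):
    """Compare a maintenance run's results with the saved base case.

    Returns (changed, missing, added):
      changed: {id: (base_result, current_result)} where the results differ
      missing: IDs in the base case that the current run did not produce
      added:   IDs produced now that were not in the base case
    """
    base = json.loads(saved)
    common = sorted(base.keys() & current.keys())
    changed = {k: (base[k], current[k]) for k in common if base[k] != current[k]}
    missing = sorted(base.keys() - current.keys())
    added = sorted(current.keys() - base.keys())
    return changed, missing, added
```

If the application has not been modified, all three results should be empty; a nonempty result narrows the auditor's attention to the transaction types affected by maintenance.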

Tracing

Another variant of the test data technique, called tracing, performs an electronic walk-through of the application's internal logic. The tracing procedure involves three steps:

1. The application under review must undergo a special compilation to activate the trace option.

2. Specific transactions or types of transactions are created as test data.

3. The test data transactions are traced through all processing stages of the program, and a listing is produced of all programmed instructions that were executed during the test.

Figure 17-13 illustrates the tracing process using a portion of the logic for a payroll application. The example shows records from two payroll files: a transaction record showing hours worked and two records from a master file showing pay rates. The trace listing at the bottom of Figure 17-13 identifies the program statements that were executed and the order of execution. Analysis of the trace listing indicates that Commands 0001 through 0020 were executed. At that point, the application transferred to Command 0060. This occurred because the employee number (the key) of the transaction record did not match the key of the first record in the master file. Then Commands 0010 through 0050 were executed.
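Python offers a rough modern analogue of a compiled trace option: `sys.settrace` installs a callback that is notified as each source line executes. The sketch below uses it to produce a trace listing for a hypothetical payroll routine, loosely modeled on the situation in Figure 17-13 (the transaction key does not match the first master record). The function names and record layouts are assumptions, and line offsets stand in for the figure's command numbers.

```python
import sys


def payroll(transaction, master):
    """Hypothetical payroll logic: match the employee key, then compute gross pay."""
    for record in master:                                 # offset 2
        if record["emp"] == transaction["emp"]:           # offset 3: key comparison
            return transaction["hours"] * record["rate"]  # offset 4
    return None                                           # offset 5: no matching master record


def trace_run(func, *args):
    """Run func under a line tracer; return (result, executed line offsets in order)."""
    executed = []
    first = func.__code__.co_firstlineno

    def tracer(frame, event, arg):
        if frame.f_code is not func.__code__:
            return None                  # do not trace other functions
        if event == "line":
            executed.append(frame.f_lineno - first)
        return tracer                    # keep receiving line events for this frame

    sys.settrace(tracer)
    try:
        result = func(*args)
    finally:
        sys.settrace(None)               # always switch tracing off again
    return result, executed
```

For a transaction whose key matches the second master record, the listing shows the key comparison at offset 3 executing twice (one mismatch, then a match) before the pay calculation at offset 4, which is the same kind of evidence the auditor reads from the trace listing in the figure.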

Advantages of Test Data Techniques

Test data techniques have three primary advantages. First, they employ through-the-computer testing, thus providing the auditor with explicit evidence concerning application functions. Second, if properly planned, test data runs can be employed with only minimal disruption to the organization’s operations. Third, they require only minimal computer expertise on the part of auditors.

Disadvantages of Test Data Techniques

The primary disadvantage of test data techniques is that auditors rely on the client's IT personnel to obtain a copy of the production application under test. The audit risk here is that the IT personnel may intentionally or accidentally provide the auditor with the wrong version of the application. Audit evidence collected independently is more reliable than evidence the client supplies. A second disadvantage is that these techniques produce a static picture of application integrity at a single point in time. They do not provide a convenient means for gathering evidence of ongoing application functionality. High cost of implementation is a third disadvantage of test data techniques. The auditor must devote considerable time to understanding program logic and creating test data. The following section shows how automating testing techniques can resolve these problems.

THE INTEGRATED TEST FACILITY

The integrated test facility (ITF) approach is an automated technique that enables the auditor to test an application’s logic and controls during its normal operation. The ITF involves one or more audit modules designed into the application during the systems development process. In addition, ITF databases contain dummy or test master file records integrated among legitimate records. Some firms create a dummy company to which test transactions are posted. During normal operations, test transactions are merged into the input stream of regular (production) transactions and are processed against the files of the dummy company. Figure 17-14 illustrates the ITF concept.

ITF audit modules are designed to discriminate between ITF transactions and production data. This may be accomplished in a number of ways. One of the simplest and most commonly used is to assign a unique range of key values exclusively to ITF transactions. For example, in a sales order processing system, account numbers between 2000 and 2100 are reserved for ITF transactions and will not be assigned to actual customer accounts. By segregating ITF transactions from legitimate transactions in this way, ITF test data do not corrupt routine reports that the application produces. Test results are produced separately in digital or hard-copy form and distributed directly to the auditor. Just as with the test data techniques, the auditor analyzes ITF results against expected results.
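The key-range technique described above can be sketched in Python. The reserved range of account numbers 2000 through 2100 comes from the example; the transaction layout and the report function are hypothetical. The point of the sketch is that routing happens by key, so routine report totals never include ITF test data while the auditor still receives the ITF results.

```python
# Account numbers 2000-2100 are reserved for ITF transactions, as in the example.
ITF_ACCOUNTS = range(2000, 2101)  # a range excludes its stop value, so 2101 keeps 2100 in


def route(transactions):
    """Separate ITF test transactions from production transactions by key range.

    Each transaction is a dict with at least "account" and "amount" keys
    (hypothetical layout). Returns (production, itf).
    """
    production, itf = [], []
    for txn in transactions:
        (itf if txn["account"] in ITF_ACCOUNTS else production).append(txn)
    return production, itf


def production_sales_total(transactions):
    """Routine report total, computed only from production transactions."""
    production, _ = route(transactions)
    return sum(txn["amount"] for txn in production)
```

The same filter is one way to implement the second remedy discussed under the disadvantages of ITF: scanning data files and removing ITF transactions before they reach the financial reporting process.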

Advantages of ITF

The ITF technique has two advantages over test data techniques. First, ITF supports ongoing monitoring of controls as SAS 78/COSO recommends. Second, ITF-enhanced applications can be economically tested without disrupting the user's operations and without the intervention of computer services personnel. Thus, ITF improves the efficiency of the audit and increases the reliability of the audit evidence gathered.

Disadvantages of ITF

The primary disadvantage of ITF is the potential for corrupting data files with test data that may end up in the financial reporting process. Steps must be taken to ensure that ITF test transactions do not materially affect financial statements by being improperly aggregated with legitimate transactions. This problem can be remedied in two ways: (1) adjusting entries may be processed to remove the effects of ITF from general ledger account balances or (2) data files can be scanned by special software that removes the ITF transactions.

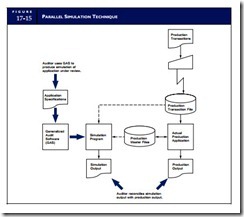

PARALLEL SIMULATION

Parallel simulation involves creating a program that simulates key features or processes of the application under review. The simulated application is then used to reprocess transactions that the production application previously processed. This technique is illustrated in Figure 17-15. The results obtained from the simulation are reconciled with the results of the original production run to determine if application processes and controls are functioning correctly.

Creating a Simulation Program

Simulation packages are commercially available and are sometimes a feature of generalized audit software (GAS).² The steps involved in performing parallel simulation testing are outlined below.

1. The auditor must first gain a thorough understanding of the application under review. Complete and current documentation of the application is required to construct an accurate simulation.

2. The auditor must then identify those processes and controls in the application that are critical to the audit. These are the processes to be simulated.

3. The auditor creates the simulation using a fourth-generation language or generalized audit software.

4. The auditor runs the simulation program using selected production transactions and master files to produce a set of results.

5. Finally, the auditor evaluates and reconciles the test results with the production results produced in a previous run.

Simulation programs are usually less complex than the production applications they represent. Because simulations contain only the application processes, calculations, and controls relevant to specific audit objectives, the auditor must carefully evaluate differences between test results and production results. Differences in output results occur for two reasons: (1) the inherent crudeness of the simulation program and (2) real deficiencies in the application’s processes or controls, which the simulation program makes apparent.
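A parallel simulation can be sketched in Python for a single critical calculation. The example is hypothetical: it assumes the process judged critical to the audit is gross pay, computed at time-and-a-half for hours over 40, and that the production run's output supplies each employee's hours, rate, and computed pay. The auditor's simplified program recomputes pay and reports every record where the two results disagree.

```python
from decimal import Decimal

FORTY = Decimal("40")


def simulate_gross_pay(hours, rate):
    """Auditor's simplified re-implementation (assumed rule: 1.5x pay over 40 hours)."""
    regular = min(hours, FORTY)
    overtime = max(hours - FORTY, Decimal("0"))
    return (regular * rate + overtime * rate * Decimal("1.5")).quantize(Decimal("0.01"))


def reconcile_with_production(production_rows):
    """Reprocess production records and list differences from production results.

    production_rows: iterable of (employee, hours, rate, production_gross_pay).
    Returns [(employee, production_pay, simulated_pay), ...] for mismatches.
    """
    differences = []
    for employee, hours, rate, production_pay in production_rows:
        simulated_pay = simulate_gross_pay(hours, rate)
        if simulated_pay != production_pay:
            differences.append((employee, production_pay, simulated_pay))
    return differences
```

Each difference then has to be investigated: it may reflect the crudeness of the simulation (for example, a pay rule the sketch omits) or a real deficiency in the production application.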
